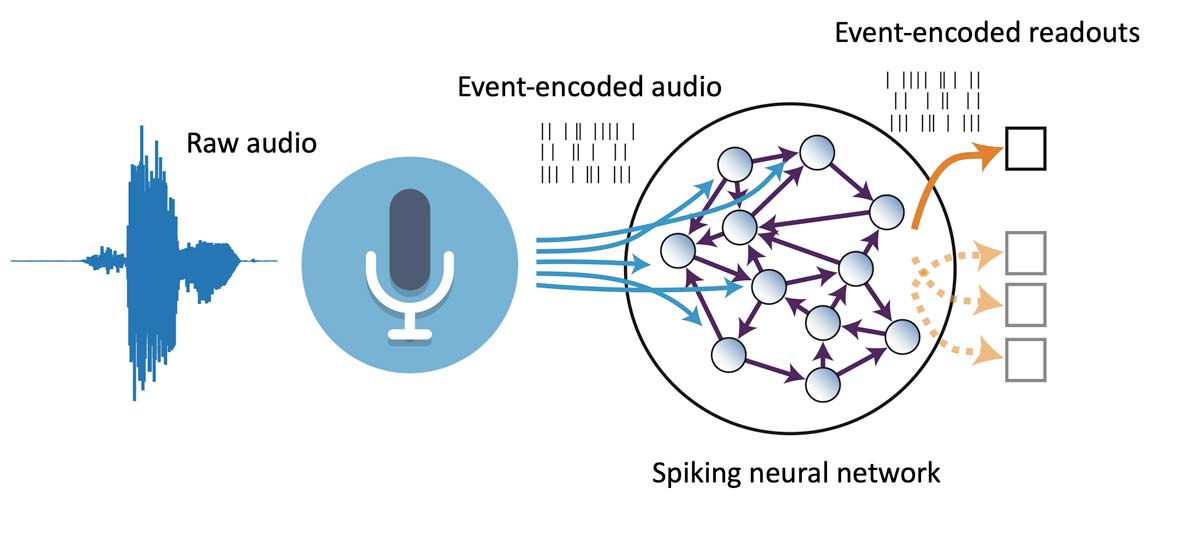

Four use cases for consumer auditory processing are addressed below:

Continuous audio scene classification:

Audio headsets, phones, hearing aids and other portable audio devices often use noise reduction or sound shaping to improve listening performance for the user. The most suitable noise-reduction parameters depend on the level and characteristics of the noise surrounding the device and user. This use case therefore classifies the user's noise environment automatically and continuously, so that the device can select and steer one of several pre-configured noise-reduction approaches. It is a continuous temporal signal-monitoring application with relaxed latency requirements (environments change on a timescale of minutes) but strict low-energy requirements (portable audio devices are almost all battery-powered).
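
The classify-then-select loop described above can be sketched as follows. This is a minimal, conventional-DSP sketch, not the neuromorphic implementation: it assumes two hand-picked frame features (level in dB and zero-crossing rate), three hypothetical scene classes with illustrative centroids, and a hypothetical mapping from scene to noise-reduction preset. Exponential smoothing with a small `alpha` gives a long time constant, reflecting that environments change on a scale of minutes, so a single loud frame does not switch the preset.

```python
import math

# Hypothetical scene centroids in (smoothed level in dB, smoothed zero-crossing rate).
# The values are illustrative assumptions, not measured data.
SCENE_CENTROIDS = {
    "quiet": (-50.0, 0.05),
    "street": (-20.0, 0.10),
    "babble": (-25.0, 0.25),
}

# Hypothetical mapping from scene label to a pre-configured noise-reduction preset.
PRESETS = {"quiet": "nr_off", "street": "nr_strong_lowfreq", "babble": "nr_speech_focus"}


def frame_features(frame):
    """Return (energy in dB, zero-crossing rate) for one frame of samples."""
    energy = sum(x * x for x in frame) / len(frame)
    level_db = 10.0 * math.log10(energy + 1e-12)
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    zcr = crossings / (len(frame) - 1)
    return level_db, zcr


class SceneClassifier:
    def __init__(self, alpha=0.01):
        # Small alpha = long time constant, matching slowly changing environments.
        self.alpha = alpha
        self.state = None

    def update(self, frame):
        """Smooth the new frame's features into the state and return (scene, preset)."""
        feats = frame_features(frame)
        if self.state is None:
            self.state = feats
        else:
            self.state = tuple(s + self.alpha * (f - s) for s, f in zip(self.state, feats))
        return self.classify()

    def classify(self):
        def dist(c):
            # Scale each axis so both features contribute comparably (assumed scales).
            return ((self.state[0] - c[0]) / 10.0) ** 2 + ((self.state[1] - c[1]) / 0.1) ** 2

        label = min(SCENE_CENTROIDS, key=lambda k: dist(SCENE_CENTROIDS[k]))
        return label, PRESETS[label]
```

In a real device the centroids would be replaced by a trained classifier; the point of the sketch is the slow smoothing followed by a discrete preset choice.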

Audio event detection:

Smart home speakers can assist in home monitoring for security and health purposes. In this continuous audio monitoring use case, distinct classes of audio events are recognised, such as glass breaking (for security), distress calls (for health monitoring), a baby crying or a dog barking. For smart home monitoring, latency requirements are relaxed (under 10 s), but the false-positive rate must be low, and confounding situations such as television soundtracks must be taken into account. Low energy consumption is still desirable for efficiency, although smart home speakers are usually tethered (mains-powered) devices.
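
One common way to keep the false-positive rate low, at the cost of some latency, is to require a class score to stay above threshold for several consecutive frames and to suppress re-triggering for a refractory period. The sketch below assumes an upstream classifier (not shown) that emits a per-class score in [0, 1] for each frame; the class names, thresholds and frame counts are hypothetical. With an assumed hop of 0.5 s, `min_frames=5` adds roughly 2.5 s of confirmation delay, well inside the 10 s budget. Persistence alone does not reject confounders such as television soundtracks; those would need to be handled elsewhere, for example by the classifier itself.

```python
from dataclasses import dataclass, field


@dataclass
class EventDetector:
    """Turns per-frame class scores into discrete event detections.

    Assumes a hypothetical upstream classifier that outputs a score in [0, 1]
    per class per frame. Thresholds and frame counts are illustrative.
    """

    thresholds: dict          # class name -> score threshold
    min_frames: int = 5       # consecutive frames above threshold required to fire
    refractory: int = 50      # frames during which a class cannot fire again
    _run: dict = field(default_factory=dict, init=False, repr=False)
    _cooldown: dict = field(default_factory=dict, init=False, repr=False)

    def step(self, scores):
        """Process one frame of scores; return the list of classes that fire now."""
        events = []
        for cls, thr in self.thresholds.items():
            if self._cooldown.get(cls, 0) > 0:
                # Still in the refractory period: count down and ignore this frame.
                self._cooldown[cls] -= 1
                self._run[cls] = 0
                continue
            if scores.get(cls, 0.0) >= thr:
                self._run[cls] = self._run.get(cls, 0) + 1
            else:
                self._run[cls] = 0
            if self._run[cls] >= self.min_frames:
                events.append(cls)
                self._run[cls] = 0
                self._cooldown[cls] = self.refractory
        return events
```

A single high-scoring frame (for example a short transient) is ignored; only a sustained detection produces an event.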

Multi-microphone auditory processing:

Smart home speakers must detect spoken commands with high sensitivity and accuracy. In most conditions, speech reaches the device as “far-field” audio input, complicated by reverberation from the surroundings and mixed with external noise sources as well as other speakers. Using multiple microphones in an array is a common approach to noise reduction. Signals from the individual microphones can be combined with different time (phase) offsets, improving the signal-to-noise ratio for sources at selected locations in space and reducing the contributions of confounding sources and reflections. When the signals are shifted so that the wavefronts from a chosen source are aligned and then added, that source adds coherently and its amplitude is reinforced, whereas sources from other directions add with mismatched phases and partially cancel. This process is commonly known as delay-and-sum beamforming (referred to here as “reverse beamforming”). In this use case, an assistive component of reverse beamforming is defined and implemented as a low-power sensory processing task designed for multiple simultaneous input channels (i.e., a microphone array), to support noise reduction in smart home devices.
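
The shift-and-add principle can be checked numerically with a minimal delay-and-sum sketch. It assumes a four-microphone linear array, whole-sample steering delays (real arrays generally need fractional delays derived from microphone spacing, arrival angle and the speed of sound), and two synthetic sinusoids: a target whose wavefront reaches each successive microphone 2 samples later, and a broadside interferer that reaches all microphones at the same time. The helper names are hypothetical. After alignment to the target, the target is reproduced exactly, while the interferer's four copies differ in phase by steps of 0.4π, so their average has a quarter of the original amplitude (about 12 dB attenuation) for these particular frequencies.

```python
import math


def delay_and_sum(channels, steer_delays):
    """Align each channel by its steering delay (whole samples) and average.

    channels[m][n] is sample n of microphone m; steer_delays[m] is how many
    samples later the target wavefront reaches microphone m than microphone 0.
    """
    m = len(channels)
    n_out = min(len(ch) - d for ch, d in zip(channels, steer_delays))
    return [
        sum(ch[n + d] for ch, d in zip(channels, steer_delays)) / m
        for n in range(n_out)
    ]


def simulate_array(n_mics, n_samples, sources):
    """Simulate a linear array; each source is (signal_fn, per_mic_delay_samples)."""
    return [
        [sum(fn(n - mic * delay) for fn, delay in sources) for n in range(n_samples)]
        for mic in range(n_mics)
    ]


def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))


# Target: period 40 samples, arrives 2 samples later at each successive mic.
# Interferer: period 10 samples, broadside (same arrival time at every mic).
target = lambda n: math.sin(2 * math.pi * n / 40)
interferer = lambda n: math.sin(2 * math.pi * n / 10)
channels = simulate_array(4, 406, [(target, 2), (interferer, 0)])
beam = delay_and_sum(channels, [0, 2, 4, 6])
```

Attenuation depends on the interferer's frequency and direction: for some combinations it cancels completely, while low frequencies relative to the array aperture are barely reduced.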

Voice Activity Detection:

Monitoring of the audio scene to detect voiced speech, which acts as a wake-up signal for smart devices.

Smart devices nowadays often come with digital voice assistants that allow the device to be controlled by speech commands. To provide this functionality, the device needs to detect and understand the user's speech commands. Keeping the complete speech recognition system active at all times is undesirable in terms of power consumption. Instead, an energy-efficient detector should remain always on and activate the complete system only when speech is detected. The aim of this use case is to develop a voice activity detector (VAD) whose output acts as a wake-up signal for the complete speech recognition system. The VAD is intended to work in dynamic, real-world acoustic environments.
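
The gating idea can be illustrated with a simple energy-based VAD. This is a classic low-cost stand-in, not the neuromorphic VAD developed in this use case, and it would not be robust in the dynamic acoustic environments targeted here. The parameter names and values (`margin_db`, `onset_frames`, `hangover_frames`, `floor_alpha`) are illustrative assumptions. The detector tracks the background noise floor, declares speech onset after several consecutive frames clearly above it, and returns `True` only on that onset frame, which is where the complete speech recognition system would be woken; a hangover prevents re-triggering during short pauses.

```python
import math


class EnergyVAD:
    """Always-on, low-cost voice activity gate (a simple energy-based stand-in).

    Tracks the background noise floor and declares speech when frame energy
    exceeds it by `margin_db` for `onset_frames` consecutive frames. A hangover
    keeps the detector active briefly after speech ends.
    """

    def __init__(self, margin_db=10.0, onset_frames=3, hangover_frames=20, floor_alpha=0.05):
        self.margin_db = margin_db
        self.onset_frames = onset_frames
        self.hangover_frames = hangover_frames
        self.floor_alpha = floor_alpha
        self.noise_floor_db = None
        self.run = 0          # consecutive frames above the floor + margin
        self.hang = 0         # remaining hangover frames while active
        self.active = False

    def step(self, frame):
        """Process one frame; return True only on the frame where speech onset is declared."""
        energy = sum(x * x for x in frame) / len(frame)
        level_db = 10.0 * math.log10(energy + 1e-12)
        if self.noise_floor_db is None:
            self.noise_floor_db = level_db
        loud = level_db > self.noise_floor_db + self.margin_db
        if not loud:
            # Adapt the noise floor only on non-speech frames.
            self.noise_floor_db += self.floor_alpha * (level_db - self.noise_floor_db)
        self.run = self.run + 1 if loud else 0
        wake = False
        if self.active:
            self.hang = self.hangover_frames if loud else self.hang - 1
            if self.hang <= 0:
                self.active = False
        elif self.run >= self.onset_frames:
            self.active = True
            self.hang = self.hangover_frames
            wake = True  # hypothetical hook: wake the full speech recognizer here
        return wake
```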

Currently, voice control is found mainly on devices equipped with powerful processors, e.g., smartphones and smart speakers. Implementing VAD with neuromorphic computing can help bring this functionality to a broader range of consumer devices with varying computation and energy profiles. The VAD can also drive functionality on other low-power devices such as hearing aids and headphones. Latency requirements are not stringent; however, power consumption must be low, because the method runs on the device itself.

SynSense, in collaboration with UZH, STGNB and FhG, is developing four innovative use cases for consumer auditory processing applications that run on a neuromorphic processor with very low power consumption.